A Webinar on “BIG DATA ANALYTICS: TOOLS AND TECHNIQUES”

Big data analytics is the often complex process of examining large amounts of data to uncover information such as hidden patterns, correlations, market trends, and customer preferences that can help businesses make better decisions.

On a broad scale, data analytics tools and techniques give organisations a way to analyse data sets and gather new information. Business intelligence (BI) queries answer basic questions about business operations and performance.

Big data analytics is a form of advanced analytics. It involves complex applications in which analytics systems power elements such as predictive models, statistical algorithms, and what-if analysis.

History and growth of big data analytics:

The term "big data" was first used in the mid-1990s to describe growing data volumes. In 2001, Doug Laney, then an analyst at Meta Group Inc., broadened the notion of big data to cover three factors: the increasing volume of data being stored and used, the variety of data being generated, and the velocity at which data is created and updated.

These three factors became known as the 3Vs of big data. Gartner popularised the concept after acquiring Meta Group and hiring Laney in 2005. The launch of the Hadoop distributed processing framework was another key event in the history of big data. Hadoop was released as an Apache open source project in 2006.

Big data analytics has recently gained traction among a wider range of users as a vital technology for enabling digital transformation. Retailers, financial services corporations, insurers, healthcare organisations, manufacturers, energy companies, and other businesses are among the users.

Why is big data analytics important?

Big data analytics technologies and software can help businesses make data-driven decisions that improve business outcomes. Possible benefits include more effective marketing, additional revenue opportunities, customer personalisation, and increased operational efficiency. With the right strategy, these benefits can give a business an edge over its competitors.

How does big data analytics work?

Data analysts, data scientists, predictive modellers, statisticians, and other analytics experts collect, process, clean, and analyse increasing volumes of structured transaction data, as well as other types of data not typically used by traditional BI and analytics tools.

Four steps of the data preparation process:

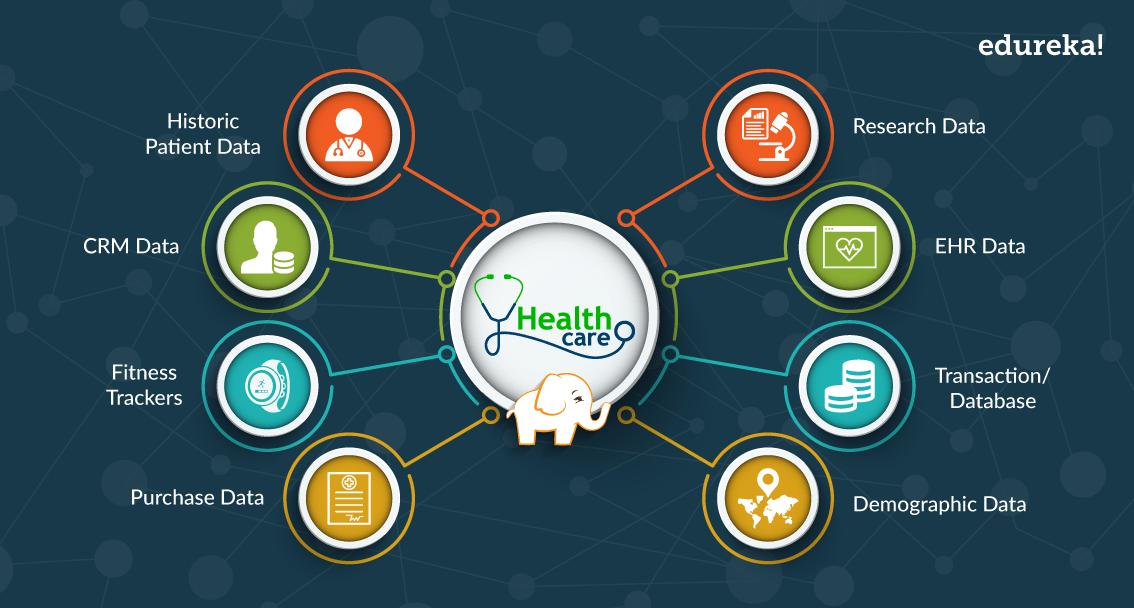

1) Data is collected. Data professionals collect data from a variety of different sources. Often, it is a mix of semi-structured and unstructured data, and each organisation will use different data streams.

2) Data is processed. After data has been collected and stored in a data warehouse or data lake, data professionals must organise, configure, and partition it properly for analytical queries. Thorough data processing leads to better performance from analytical queries.

3) Data is cleansed to ensure its quality. Data professionals scrub the data using scripting tools or enterprise software. They look for errors or inconsistencies, such as duplications or formatting mistakes, and organise and tidy up the data.

4) Data is analysed. The data that has been collected, processed, and cleaned is analysed with analytics software.
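The four steps above can be sketched as a small pipeline. This is only an illustration using the Python standard library: the record fields, the two sample sources, and the function names (`collect`, `process`, `clean`, `analyse`) are hypothetical, and a real deployment would read from a data warehouse or data lake rather than in-memory lists.

```python
from collections import Counter

# Hypothetical raw records from two made-up sources.
WEB_ORDERS = [
    {"customer": " Alice ", "amount": "120.50", "region": "north"},
    {"customer": "bob", "amount": "80", "region": "South"},
]
STORE_ORDERS = [
    {"customer": "Bob", "amount": "80", "region": "south"},     # duplicate once normalised
    {"customer": "carol", "amount": "n/a", "region": "north"},  # malformed amount
    {"customer": "Dave", "amount": "45.25", "region": "north"},
]


def collect(*sources):
    """Step 1: gather records from every source into one list."""
    return [record for source in sources for record in source]


def process(records):
    """Step 2: organise records into a consistent shape for querying."""
    return [
        {
            "customer": r["customer"].strip().title(),
            "amount": r["amount"].strip(),
            "region": r["region"].strip().lower(),
        }
        for r in records
    ]


def clean(records):
    """Step 3: drop duplicates and records whose amount is not a number."""
    seen, cleaned = set(), []
    for r in records:
        try:
            amount = float(r["amount"])
        except ValueError:
            continue  # formatting error: skip the record
        key = (r["customer"], amount, r["region"])
        if key in seen:
            continue  # duplicate: skip the record
        seen.add(key)
        cleaned.append({**r, "amount": amount})
    return cleaned


def analyse(records):
    """Step 4: a simple analytical query, total amount per region."""
    totals = Counter()
    for r in records:
        totals[r["region"]] += r["amount"]
    return dict(totals)


cleaned_records = clean(process(collect(WEB_ORDERS, STORE_ORDERS)))
totals = analyse(cleaned_records)
print(totals)
```

Keeping each step as a separate function mirrors the description: the cleaning step can be tightened or the analysis swapped out without touching collection.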

Big data analytics technologies and tools:

Big data analytics processes are supported by a variety of tools and technologies. The following are some of the most common:

Hadoop: Hadoop is a free, open-source framework for storing and processing large data sets. It can handle large amounts of both structured and unstructured data.

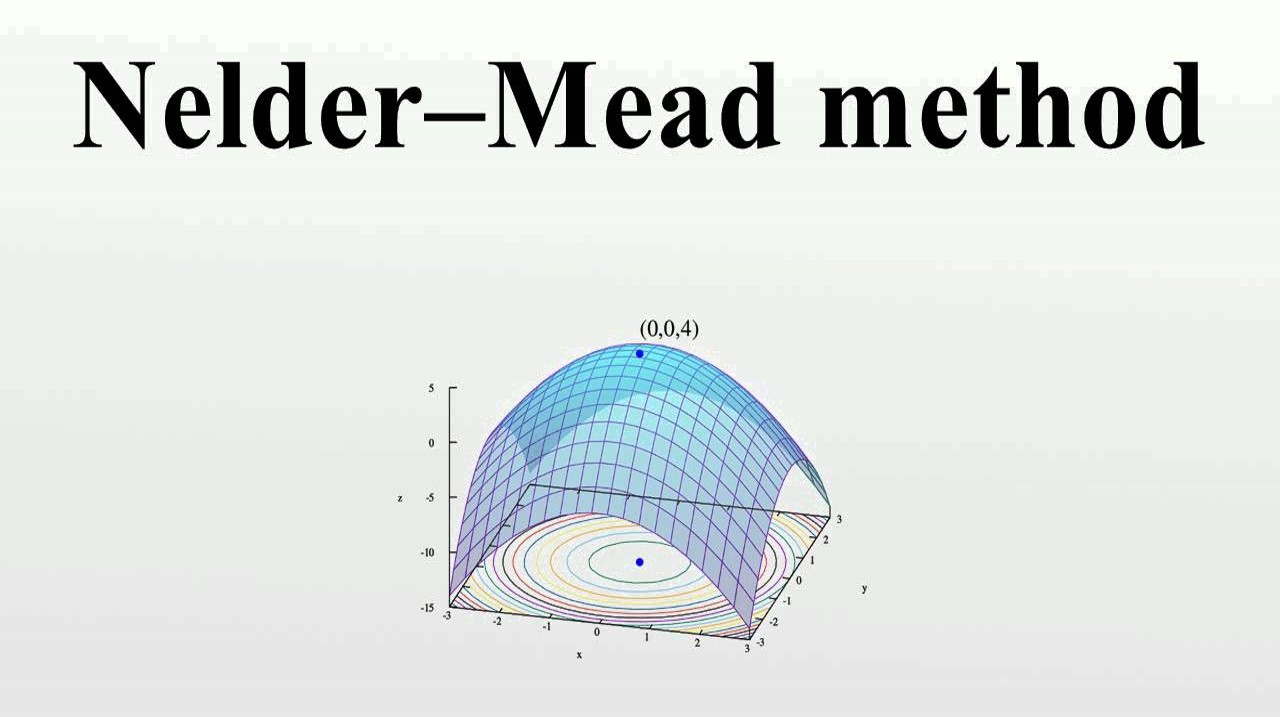
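Hadoop is commonly associated with the MapReduce programming model, in which work is split into a map phase, a grouping (shuffle) phase, and a reduce phase. The sketch below imitates that model in plain Python on a single machine to show the idea. It does not use any Hadoop API, and the function names and sample documents are made up for illustration.

```python
from collections import defaultdict


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle(pairs):
    """Shuffle: group all emitted values by key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Reduce: combine the values for each key into one result."""
    return {key: sum(values) for key, values in grouped.items()}


# Hypothetical input; on a cluster each document could be mapped on a different node.
documents = ["big data tools", "big data techniques", "data analytics"]
pairs = [pair for doc in documents for pair in map_phase(doc)]
word_counts = reduce_phase(shuffle(pairs))
print(word_counts)
```

Because each call to `map_phase` depends only on its own document, the map work can in principle be spread across many machines, which is what makes the model suitable for large data sets.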

Predictive analytics: Predictive analytics hardware and software process large amounts of complex data and use machine learning and statistical algorithms to predict the outcomes of future events. Businesses use predictive analytics tools for fraud detection, marketing, risk assessment, and operations.
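As a very small example of a statistical algorithm used for prediction, the sketch below fits a straight line to past values by ordinary least squares and extrapolates one step ahead. The monthly sales figures are invented and deliberately perfectly linear, and `fit_line` is a hypothetical helper. Production tools would use far richer models and data.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Made-up history: sales for months 1 to 5.
months = [1, 2, 3, 4, 5]
sales = [10.0, 12.0, 14.0, 16.0, 18.0]

slope, intercept = fit_line(months, sales)
forecast_month_6 = slope * 6 + intercept  # predicted outcome of a future event
print(slope, intercept, forecast_month_6)
```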

Stream analytics: Stream analytics tools are used to filter, aggregate, and analyse big data that may be stored in a variety of formats or platforms.
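The following sketch shows one way of filtering and aggregating a stream, here by summing values per sensor in fixed ten-second windows. The event fields (`timestamp`, `sensor`, `value`), the threshold, and the function names are assumptions made for the example and do not refer to any particular stream analytics product.

```python
from collections import defaultdict


def filter_events(events, min_value):
    """Filter: lazily keep only events whose value reaches the threshold."""
    for event in events:
        if event["value"] >= min_value:
            yield event


def aggregate_by_window(events, window_seconds):
    """Aggregate: sum values per sensor within fixed (tumbling) time windows."""
    totals = defaultdict(float)
    for event in events:
        window_start = event["timestamp"] // window_seconds * window_seconds
        totals[(window_start, event["sensor"])] += event["value"]
    return dict(totals)


# Hypothetical events; an iterator stands in for a live feed.
event_stream = iter([
    {"timestamp": 1, "sensor": "a", "value": 5.0},
    {"timestamp": 4, "sensor": "a", "value": 0.5},  # below threshold, filtered out
    {"timestamp": 7, "sensor": "b", "value": 3.0},
    {"timestamp": 12, "sensor": "a", "value": 2.0},
    {"timestamp": 15, "sensor": "a", "value": 4.0},
])

window_totals = aggregate_by_window(
    filter_events(event_stream, min_value=1.0), window_seconds=10
)
print(window_totals)
```

For simplicity this version returns all window totals once the input ends. A real streaming system would typically emit each window's result as soon as that window closes.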

Distributed storage: Data is stored in a distributed storage system and replicated, usually on a non-relational database. Replication can protect against node failures and lost or corrupted big data, or provide low-latency access.
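To illustrate how replication protects against a node failure, here is a toy in-memory key-value store that writes every key to two of three nodes. The class `ReplicatedStore`, the node names, and the hash-based placement rule are all hypothetical simplifications and are not how any specific product places data.

```python
import hashlib


class ReplicatedStore:
    """Toy key-value store that writes each key to several nodes."""

    def __init__(self, node_names, replicas=2):
        self.nodes = {name: {} for name in node_names}
        self.replicas = replicas

    def nodes_for(self, key):
        """Pick `replicas` consecutive nodes, starting from a hash of the key."""
        names = sorted(self.nodes)
        start = int(hashlib.sha256(key.encode()).hexdigest(), 16) % len(names)
        return [names[(start + i) % len(names)] for i in range(self.replicas)]

    def put(self, key, value):
        for name in self.nodes_for(key):
            self.nodes[name][key] = value

    def get(self, key, failed=()):
        """Read from the first replica that is not marked as failed."""
        for name in self.nodes_for(key):
            if name not in failed and key in self.nodes[name]:
                return self.nodes[name][key]
        raise KeyError(key)


store = ReplicatedStore(["node-1", "node-2", "node-3"], replicas=2)
store.put("order:42", {"total": 80})

# Simulate losing the first replica: the read falls back to the second copy.
primary = store.nodes_for("order:42")[0]
value_after_failure = store.get("order:42", failed={primary})
print(value_after_failure)
```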

NoSQL databases: These are non-relational data management systems that are useful when working with large sets of distributed data. They do not require a fixed schema, which makes them well suited to raw and unstructured data.
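The lack of a fixed schema can be shown with a few lines of Python. The sketch below imitates a document collection with a plain list. The `insert` and `find` helpers and the sample documents are invented for illustration and do not correspond to any particular NoSQL database's API.

```python
import json

# A stand-in for a document collection: records need not share the same fields.
collection = []


def insert(document):
    """Store a JSON-safe copy of the document, whatever fields it has."""
    collection.append(json.loads(json.dumps(document)))


def find(**criteria):
    """Return documents whose fields match all the given values."""
    return [
        doc for doc in collection
        if all(doc.get(field) == value for field, value in criteria.items())
    ]


insert({"type": "tweet", "user": "alice", "text": "big data webinar today"})
insert({"type": "sensor", "device": "t-17", "temperature": 21.5, "tags": ["iot"]})
insert({"type": "tweet", "user": "bob", "text": "hello", "retweets": 3})

tweets = find(type="tweet")
sensor_readings = find(device="t-17")
print(len(tweets), sensor_readings[0]["temperature"])
```

No table definition was needed before inserting: the third document adds a `retweets` field that the first one lacks, and queries simply skip documents without the requested field.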

Other big data analytics technologies and tools include a data lake, a data warehouse, knowledge discovery/big data mining tools, in-memory data fabric, data virtualization, data integration software, data quality software, data preprocessing software, and Spark.

Big data analytics uses and examples

Here are a few examples of how big data analytics can benefit businesses: customer acquisition and retention, targeted ads, product development, price optimization, supply chain and channel analytics, risk management, and improved decision-making.
